
A Multimodal Approach to Measuring Emotion

Discover how combining physiological measurements with video-based behavioral observation creates a comprehensive approach to measuring emotions in research studies.

Fundamentally, all behavioral, self-report, autonomic, hormonal, and imaging data are measuring the activity/output of biological systems at different levels of description.

After decades of emotion research, it is clear that no single measure can provide a comprehensive metric of emotion. Only by integrating multiple measures can one start to gain a clear picture of affect and its explanatory reach.

Nearly all prominent contemporary theories of emotion have the Autonomic Nervous System (ANS) at their core. We now know the brain structures responsible for controlling the sympathetic and parasympathetic branches of the ANS are the same structures responsible for regulating cognitive, affective, and behavioral responses. This means that emotions can be quantified by measuring physiological processes that are known to correlate with activity in these brain structures.

One notable advantage of measuring ANS function is that you gain insight into psychological states and neural processes while the participant is engaged in tasks, without having to distract or disrupt them.

A study that combines video collection for behavioral outcomes and autonomic monitoring for emotionally driven physiological changes can be minimally invasive, allowing for a pure multimodal measure of emotion.

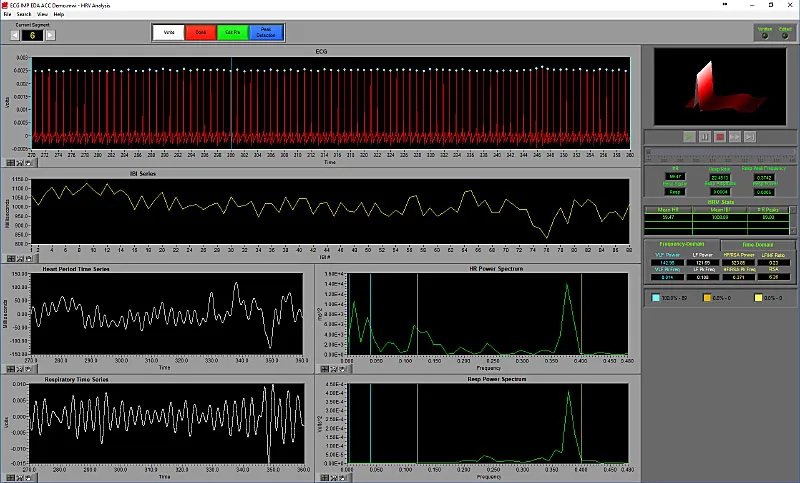

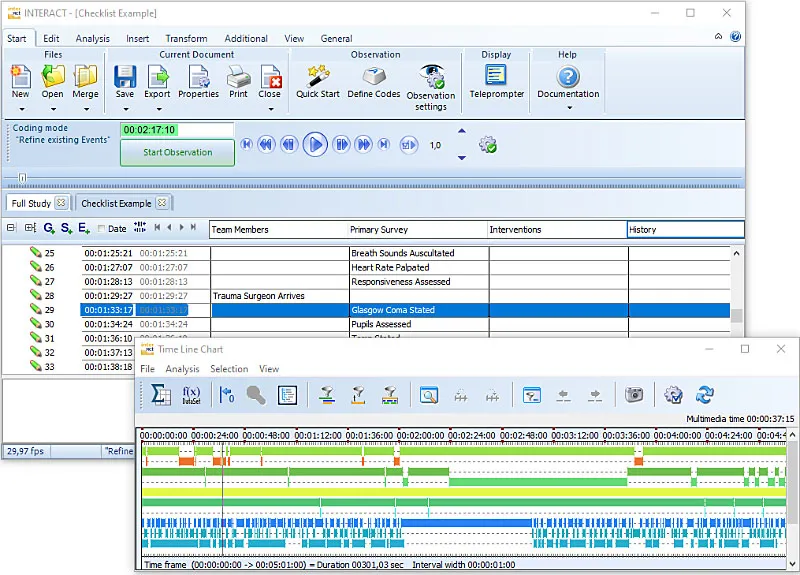

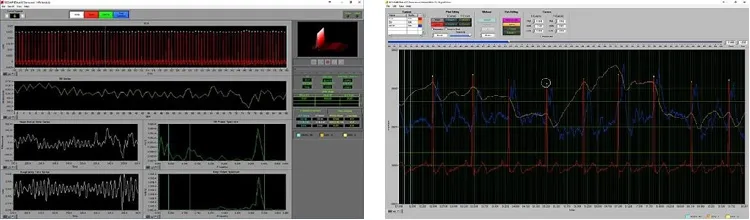

Heart Rate Variability (HRV) analysis in the MindWare Software

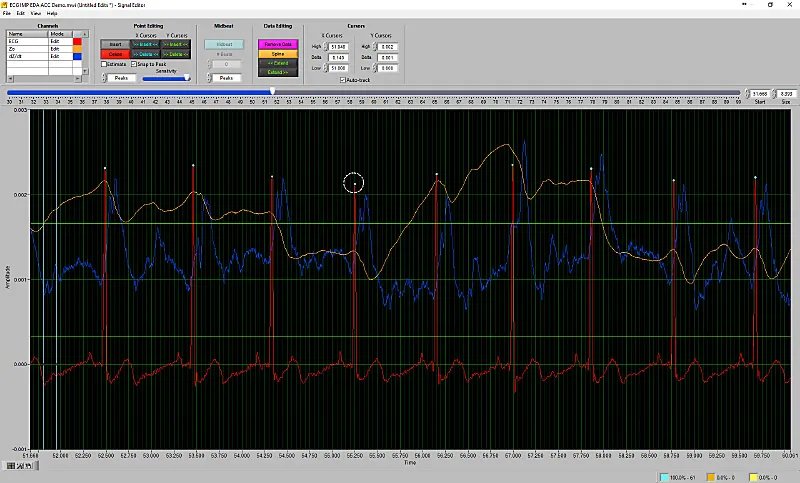

IMP editor in the MindWare Software

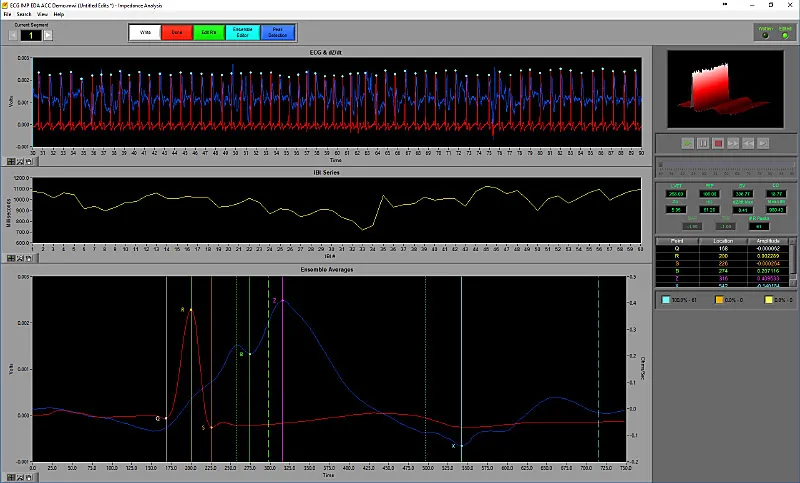

IMP analysis in the MindWare Software

Why MindWare

For nearly 20 years, MindWare Technologies has been at the forefront of technological development for measuring physiology with the purpose of decoding these emotional responses.

MindWare provides the tools for acquiring and analyzing popular metrics of sympathetic/parasympathetic nervous system activity including Heart Rate Variability (HRV), Cardiac Impedance, Skin Conductance (EDA), Electromyography, and more - all developed and firmly grounded in peer-reviewed research and techniques.

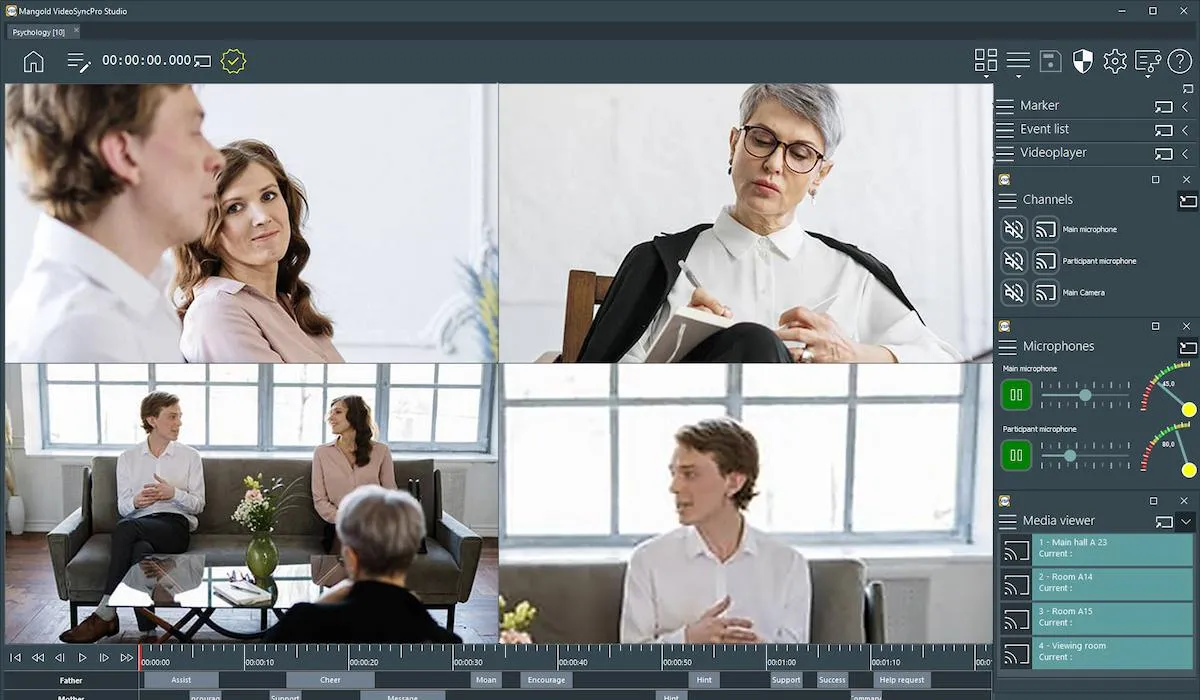

The seamless integration with Mangold VideoSyncPro and Mangold INTERACT allows for precise synchronization of behavioral and physiological measures, which is crucial for success with a multimodal approach. Through this integration and our shared commitment to unparalleled customer support, we will provide you with the most comprehensive solution for your research.

Learn more about who MindWare is and what they do by contacting a Mangold representative today.

Seamlessly Integrate Behavior Coding and Measuring Physiology with MindWare and Mangold Solutions

Combine a MindWare physiology recording system with the Mangold VideoSyncPro video recording software for synchronized capture of video and physiology.

Use Mangold INTERACT for behavior coding and in-depth analysis of your recorded video footage.

Success Story

Specific Risk Factor, Attentional Biases for Facial Displays of Emotion

The Project:

Much of Professor Dr. Brandon Gibb’s (Binghamton University, USA) research focuses on risk for the intergenerational transmission of depression.

In this study, the researchers examined how one specific risk factor for depression, attentional biases for facial displays of emotion, may develop in infants of mothers with major depressive disorder (MDD). They hypothesize that attentional avoidance of mom’s facial expressions, and of sad faces more generally, develops because babies experience increased arousal and negative affect when looking at these faces. That arousal is reduced when they shift their attention away, which negatively reinforces the attentional avoidance.

The Challenge:

To properly test the hypothesis, the ECG signals had to be time-locked to shifts in the infants’ attention.

The Solution:

A MindWare physiology recording system was combined with the Mangold VideoSyncPro video recording software for synchronized capture of video and physiology, followed by an integrated analysis in Mangold INTERACT.

The Benefits:

- Frame-by-frame video coding with Mangold INTERACT

- Import of codes to MindWare software

- E.g., to check an infant’s average heart rate in the five seconds before and after a shift in attention away from their mother
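The heart rate comparison mentioned above can be illustrated with a short Python sketch. This is not the MindWare or INTERACT implementation. It assumes that R-peak times have already been extracted from the ECG and that attention-shift times have been exported from the behavioral coding, both in seconds on the same synchronized clock. The function names and the synthetic data are hypothetical.

```python
from statistics import mean


def mean_heart_rate(r_peaks, start, end):
    """Mean heart rate (bpm) over inter-beat intervals ending in (start, end].

    r_peaks: sorted R-peak times in seconds (assumed already detected).
    Returns None if no beat falls inside the window.
    """
    rates = [
        60.0 / (curr - prev)
        for prev, curr in zip(r_peaks, r_peaks[1:])
        if start < curr <= end
    ]
    return mean(rates) if rates else None


def heart_rate_around_events(r_peaks, event_times, window=5.0):
    """Compare mean heart rate in the `window` seconds before and after each event."""
    return [
        {
            "event": t,
            "before": mean_heart_rate(r_peaks, t - window, t),
            "after": mean_heart_rate(r_peaks, t, t + window),
        }
        for t in event_times
    ]


# Synthetic data (illustrative only): beats every 0.5 s up to 10 s,
# then every 0.75 s, with a coded attention shift at 10 s.
r_peaks = [i * 0.5 for i in range(21)] + [10.0 + 0.75 * k for k in range(1, 9)]
attention_shifts = [10.0]

for row in heart_rate_around_events(r_peaks, attention_shifts):
    print(row)
```

Each inter-beat interval is assigned to the time of the beat that closes it, so an interval ending exactly at the attention shift counts as "before". Real analyses would also need artifact handling and a decision on how to treat windows that contain too few beats.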

The Feedback:

“The ability to integrate behavioral codes from Mangold INTERACT with physiological signals obtained with MindWare is perfect and exactly what we need for our project.”

Professor Dr. Brandon E. Gibb, Binghamton University, Department of Psychology, Director, Mood Disorders Institute, Binghamton, NY, USA

INTERACT: One Software for Your Entire Observational Research Workflow

From audio/video-based content-coding and transcription to analysis - INTERACT has you covered.