
Creating an Observational Research Lab

The impact of technology on behavioral research and the potential of modern observational research labs.

Creating an Observational Research Lab: Important Technical Background Information, Challenges, and Opportunities

1. The Change in Observational Research

Observational research is a key tool in scientific work in disciplines such as psychology, human-computer interaction, medicine, and ethology. In recent years, it has undergone a transformation. The trend is moving away from manual coding and subjective evaluation toward technology-supported methods. Driven by the need for greater precision and objectivity, as well as the analysis of complex behavioral patterns in realistic environments, modern research laboratories are increasingly relying on advanced sensor technology, specialized software, and powerful IT infrastructures. These technologies are no longer a supplement, but a prerequisite for systematically expanding the boundaries of what can be observed.

This article provides an overview of the technical fundamentals required to set up and operate a modern observational research laboratory. It highlights key aspects of multimodal data collection, including the sensors and modalities used, specialized software solutions, and communication protocols necessary for seamless data flow. Additionally, it presents advanced technologies that enable deeper insights into complex behavioral patterns. It also discusses specific application fields, typical challenges and limitations, the types of data obtained, and common analysis methods. The aim is to provide a practical guide to exploiting the potential of next-generation observational research.

2. Core Themes in Modern Observational Research

The development of a state-of-the-art observational research lab hinges on several interconnected core themes, each contributing to the overall capability and efficiency of the research endeavor. These themes represent the fundamental pillars upon which robust and reliable data collection and analysis systems are built.

Behavior Research Labs: Beyond the Traditional

Modern behavior research labs are evolving beyond simple audio/video recording setups. They are becoming integrated environments where human and animal behavior can be observed, quantified, and analyzed in detail. This involves capturing not just overt actions, but also subtle physiological responses and cognitive states. The emphasis is on creating controlled yet ecologically valid settings that allow for the systematic study of behavior in response to specific stimuli or tasks.

Multimodal Research: A Holistic Perspective

At the heart of contemporary observational research is the concept of Multimodal Research. This approach involves the simultaneous collection of data from multiple distinct sources or modalities to provide a more comprehensive and holistic understanding of a phenomenon. For instance, studying user experience might involve combining eye-tracking data (where a user is looking), physiological responses (stress levels via GSR), and verbal protocols (what they are saying). The power of multimodal research lies in its ability to reveal correlations and interactions between different aspects of behavior and cognition that would be missed by single-modality studies. It allows researchers to triangulate findings, validate observations across different data streams, and build richer, more nuanced models of complex processes.

Sensor Integration: The Interconnected Web

Effective Sensor Integration is paramount for successful multimodal data collection. This involves not only physically mounting and connecting diverse sensors but also ensuring their seamless operation and data synchronization. Challenges often arise from varying hardware interfaces, power requirements, and data output formats. A well-integrated system minimizes setup time, reduces potential points of failure, and ensures that all data streams are precisely aligned in time. This often necessitates custom hardware solutions, standardized cabling, and a centralized control system capable of managing multiple input devices simultaneously.

Lab Automation: Streamlining the Workflow

Lab Automation refers to the use of technology to streamline and standardize various aspects of the research workflow, from experiment setup and data collection to initial processing and storage. This can include automated calibration of sensors, programmed stimulus presentation, automated data logging, and even automated preliminary data quality checks. Automation reduces human error, increases efficiency, and ensures consistency across experimental trials and participants. It frees researchers from tedious manual tasks, allowing them to focus on higher-level analytical and interpretive work.
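Programmed stimulus presentation and automated logging can be illustrated with a small scheduler. The following Python sketch is hypothetical and not taken from any particular product: a `ProtocolRunner` presents stimuli at planned onsets relative to the start of a trial and logs planned versus actual onset times, so timing deviations can be checked automatically afterwards. Making the clock and sleep functions injectable is an assumption added so the protocol can be tested without waiting in real time.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# A schedule entry: (onset in seconds after trial start, label, function that presents the stimulus)
ScheduleEntry = Tuple[float, str, Callable[[], None]]


@dataclass
class ProtocolRunner:
    """Hypothetical protocol runner: presents stimuli at fixed onsets and logs their timing."""

    clock: Callable[[], float] = time.monotonic  # injectable so the runner can be tested offline
    sleep: Callable[[float], None] = time.sleep
    log: List[Tuple[str, float, float]] = field(default_factory=list)

    def run(self, schedule: List[ScheduleEntry]) -> None:
        t0 = self.clock()
        for onset, label, present in sorted(schedule, key=lambda entry: entry[0]):
            # Wait until the planned onset, measured from the start of the trial.
            remaining = onset - (self.clock() - t0)
            if remaining > 0:
                self.sleep(remaining)
            present()
            # Record (label, planned onset, actual onset) so timing errors can be checked later.
            self.log.append((label, onset, self.clock() - t0))
```

In a real setup, each `present` callable would trigger the stimulus software or send a marker to the recording system. A loop based on `time.sleep` offers no hard real-time guarantees, which is exactly why logging the actual onsets is worthwhile.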

Real-Time Analysis: Immediate Insights

The capability for Real-Time Analysis is a significant advancement in observational research. Instead of waiting for post-hoc processing, researchers can monitor and analyze data as it is being collected. This is particularly valuable in adaptive experimental designs, where stimuli or task parameters might need to be adjusted based on a participant’s ongoing responses. Real-time analysis can provide immediate feedback on experimental conditions, identify potential issues with data quality, and even trigger automated interventions or events within the experimental setup. It requires robust computational power and efficient data pipelines capable of processing high-volume, high-velocity data streams with minimal latency.
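As a minimal illustration of real-time data-quality checking, the sketch below keeps a sliding window of the most recent samples of one signal and reports a "flatline" (for example, a detached electrode or a stalled sensor) as soon as the standard deviation within the window drops below a threshold. The class name, the threshold, and the callback mechanism are hypothetical choices; a real pipeline would likely combine several such checks per stream.

```python
from collections import deque
from statistics import pstdev
from typing import Callable, Deque, Optional


class RealTimeQualityMonitor:
    """Hypothetical sliding-window check that flags a flatlining signal as data arrives."""

    def __init__(self, window: int, min_std: float,
                 on_issue: Optional[Callable[[str, float], None]] = None):
        self.buffer: Deque[float] = deque(maxlen=window)  # keeps only the newest samples
        self.min_std = min_std
        self.on_issue = on_issue
        self.flagged = False

    def push(self, timestamp: float, value: float) -> bool:
        """Add one sample; return True if the current window looks problematic."""
        self.buffer.append(value)
        if len(self.buffer) < self.buffer.maxlen:
            return False  # not enough data yet to judge
        problem = pstdev(self.buffer) < self.min_std
        if problem and not self.flagged and self.on_issue is not None:
            # Report only the onset of an issue, e.g. to pause the task or alert the operator.
            self.on_issue("flatline", timestamp)
        self.flagged = problem
        return problem
```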

Data Synchronization: The Temporal Alignment Challenge

Perhaps the most critical and technically demanding aspect of multimodal research is Data Synchronization. When collecting data from multiple sensors, each operating at its own sampling rate and potentially with its own internal clock, ensuring that all data points are precisely aligned in time is crucial for meaningful analysis. Even slight temporal misalignments, for example caused by fixed offsets or gradual clock drift between devices, can lead to erroneous conclusions, especially when examining rapid behavioral or physiological events. Achieving sub-millisecond synchronization across diverse data streams is a significant technical challenge that requires specialized hardware, software, and communication protocols.
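One common way to correct for clock offset and drift, assuming both devices record the same synchronization events (for example, TTL pulses), is to fit a linear mapping from the device clock to a master clock. The sketch below uses an ordinary least-squares fit; the function names are hypothetical, and a linear model assumes the drift rate stays constant over the recording.

```python
from typing import List, Sequence, Tuple


def fit_clock_mapping(device_ts: Sequence[float], master_ts: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of master = slope * device + offset from paired sync events."""
    n = len(device_ts)
    if n < 2 or n != len(master_ts):
        raise ValueError("need at least two paired sync events")
    mean_d = sum(device_ts) / n
    mean_m = sum(master_ts) / n
    cov = sum((d - mean_d) * (m - mean_m) for d, m in zip(device_ts, master_ts))
    var = sum((d - mean_d) ** 2 for d in device_ts)
    slope = cov / var                  # slope != 1.0 means the device clock runs fast or slow
    offset = mean_m - slope * mean_d   # constant offset between the two clocks
    return slope, offset


def to_master_time(ts: Sequence[float], slope: float, offset: float) -> List[float]:
    """Map device timestamps onto the master clock."""
    return [slope * t + offset for t in ts]


slope, offset = fit_clock_mapping([0.0, 100.0, 200.0, 300.0], [0.5, 100.51, 200.52, 300.53])
print(slope, offset, to_master_time([150.0], slope, offset))
```

A fitted slope of 1.0001 corresponds to 100 ppm of drift, or about 0.36 s per hour, which is far outside sub-millisecond tolerances if left uncorrected.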

IT Infrastructure: The Backbone of the Lab

A robust and scalable IT Infrastructure forms the backbone of any modern observational research lab. This includes high-performance computing resources for data acquisition and processing, high-capacity storage solutions for vast amounts of raw and processed data, and high-speed networking for efficient data transfer. Considerations for IT infrastructure extend to data security, backup strategies, and accessibility for multiple researchers. The choice between local servers, cloud-based solutions, or hybrid models depends on the scale of research, budget, and data sensitivity. Adequate IT infrastructure ensures data integrity, computational power for complex analyses, and collaborative research capabilities.
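Storage requirements can be estimated before any hardware is bought. The following sketch simply multiplies data rates by recording time; the function name and all parameter values in the example (40 one-hour sessions, four cameras at 8 Mbit/s each, a 64-channel EEG at 500 Hz stored as 4-byte samples) are illustrative assumptions, not recommendations.

```python
def estimate_storage_gb(sessions: int, hours_per_session: float,
                        cameras: int, video_mbps: float,
                        sensor_channels: int, sensor_hz: float,
                        bytes_per_sample: int = 4) -> float:
    """Rough raw-data volume in gigabytes (10**9 bytes); every input is an assumption."""
    seconds = sessions * hours_per_session * 3600
    video_bytes = cameras * video_mbps * 1e6 / 8 * seconds           # Mbit/s -> bytes/s
    sensor_bytes = sensor_channels * sensor_hz * bytes_per_sample * seconds
    return (video_bytes + sensor_bytes) / 1e9


print(estimate_storage_gb(sessions=40, hours_per_session=1.0, cameras=4, video_mbps=8.0,
                          sensor_channels=64, sensor_hz=500.0))
```

With these assumptions, video dominates at 576 GB while the EEG adds about 18 GB, for roughly 594 GB of raw data before backups, derived data, or redundancy are taken into account.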

3. Sensor Types & Modalities: Capturing the Nuances of Behavior

The richness of data in an observational research lab stems directly from the diversity and precision of its integrated sensors. Each modality offers a unique window into different aspects of human or animal behavior and physiology, contributing to a comprehensive understanding of complex phenomena. The selection and deployment of these sensors require careful consideration of research questions, environmental constraints, and data integration capabilities.

Video Monitoring: The Foundation of Observational Data

Video Monitoring forms the bedrock of most observational research labs. High-resolution video cameras capture overt behaviors, interactions, and environmental cues, providing a rich qualitative and quantitative record. Modern video systems extend beyond simple recording, incorporating features such as infrared capability for low-light conditions and on-premise synchronized audio capture. The quality of video data is paramount for subsequent behavioral coding and analysis, necessitating cameras with good low-light performance and minimal latency. The choice of container format (e.g., MP4) and compression codec (e.g., H.264) is also critical, balancing file size against data fidelity. Advanced video recording systems can also integrate with other sensors, overlaying additional information or visuals directly onto the video feed for identification, security, or GDPR compliance reasons.

Audio Recording: An Indispensable Source of Information

Audio recordings are essential for understanding visually observed behavior. They provide context that helps explain the underlying causes of a particular behavior, and they can offer valuable insight into thought processes such as problem-solving, design creation, and conflict management. For this reason, a clear audio recording is vital to most observational research projects. Recording high-quality audio is not a simple task and requires a well-designed system. Decisions about mixing audio feeds into video feeds and about recording audio as separate, pure audio files require careful consideration.

Eye Tracking: Unveiling Attentional Processes

Eye Tracking technology provides valuable insights into visual attention, cognitive processing, and decision-making. Audio and video recordings reveal what participants say and do, but what they are looking at remains hidden, even though this visual information can be essential for interpreting the recorded audio and video content. By precisely measuring gaze direction, saccades, fixations, and pupil dilation, researchers can infer what an individual is looking at, for how long, and how their cognitive load or emotional state might be changing. Eye trackers come in various forms, including remote desktop-mounted systems, head-mounted wearable devices, and even integrated solutions within virtual reality (VR) headsets. Key technical considerations include accuracy (average gaze error, expressed in degrees of visual angle), precision (consistency of repeated measurements), sampling rate (Hz), and robustness to head movements or varying lighting conditions. The integration of eye-tracking data with video recordings allows for the creation of heatmaps, gaze plots, and areas of interest (AOI) analysis, providing a quantitative measure of visual engagement and attentional allocation.
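Fixations are commonly extracted from raw gaze samples with algorithms such as dispersion-threshold identification (I-DT). The following Python sketch implements the basic I-DT idea under simplifying assumptions: samples are `(t, x, y)` tuples sorted by time, dispersion is measured in screen pixels rather than degrees of visual angle, and the thresholds are hypothetical values that would have to be tuned for a given eye tracker and setup.

```python
from typing import List, NamedTuple, Sequence, Tuple

Sample = Tuple[float, float, float]  # (timestamp in s, x in px, y in px)


class Fixation(NamedTuple):
    start: float  # timestamp of the first sample in the fixation
    end: float    # timestamp of the last sample in the fixation
    x: float      # centroid x
    y: float      # centroid y


def _dispersion(points: Sequence[Sample]) -> float:
    xs = [p[1] for p in points]
    ys = [p[2] for p in points]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations_idt(samples: Sequence[Sample], max_dispersion: float,
                         min_duration: float) -> List[Fixation]:
    """Dispersion-threshold (I-DT) fixation detection."""
    fixations: List[Fixation] = []
    i, n = 0, len(samples)
    while i < n:
        # Build an initial window that spans at least min_duration.
        j = i
        while j < n and samples[j][0] - samples[i][0] < min_duration:
            j += 1
        if j >= n:
            break  # remaining samples are too short for another fixation
        if _dispersion(samples[i:j + 1]) <= max_dispersion:
            # Extend the window while the points stay within the dispersion threshold.
            while j + 1 < n and _dispersion(samples[i:j + 2]) <= max_dispersion:
                j += 1
            window = samples[i:j + 1]
            fixations.append(Fixation(window[0][0], window[-1][0],
                                      sum(p[1] for p in window) / len(window),
                                      sum(p[2] for p in window) / len(window)))
            i = j + 1
        else:
            i += 1  # drop the first point and try again
    return fixations
```

The resulting fixations (start, end, centroid) are the typical input for AOI analysis, for example counting how many fixations, or how much fixation time, falls within each area of interest.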

Biophysical Sensors: Probing Physiological Responses

Beyond overt behavior and visual attention, Biophysical Sensors enable the measurement of subtle physiological responses that often reflect underlying cognitive and emotional states. These sensors provide objective, continuous data streams that complement behavioral observations, offering a deeper understanding of internal processes. The integration of these diverse physiological signals is a hallmark of advanced multimodal research.

- EEG (Electroencephalogram): EEG measures electrical activity in the brain, reflecting neural oscillations associated with various cognitive states (e.g., attention, memory, sleep) and event-related potentials (ERPs) in response to specific stimuli. EEG systems range from high-density research-grade setups with hundreds of channels to more portable, fewer-channel systems. Technical considerations include sampling rate (typically hundreds to thousands of Hz), impedance levels (for signal quality), and noise reduction techniques. EEG data provides millisecond-level temporal resolution, making it ideal for studying the precise timing of neural events.

- HRV (Heart Rate Variability): HRV is a measure of the beat-to-beat variations in heart rate, reflecting the activity of the autonomic nervous system (ANS). Higher HRV is generally associated with better emotional regulation and cognitive flexibility, while lower HRV can indicate stress or fatigue. HRV is typically derived from ECG signals or photoplethysmography (PPG) sensors (e.g., from a finger clip or wearable). Analysis involves time-domain, frequency-domain, and non-linear methods to extract meaningful physiological insights.

- ECG (Electrocardiogram): ECG measures the electrical activity of the heart, providing precise heart rate data and insights into cardiac function. It is a fundamental physiological measure often used in conjunction with other sensors to assess arousal, stress, and emotional responses. High-quality ECG signals are crucial for accurate HRV analysis.

- GSR/EDA (Galvanic Skin Response/Electrodermal Activity): GSR, also known as EDA, measures changes in the electrical conductivity of the skin, which are directly related to sweat gland activity. Since sweat glands are innervated by the sympathetic nervous system, GSR serves as a sensitive indicator of physiological arousal, stress, and emotional responses. It is a non-invasive and widely used measure in psychological and behavioral research.

- Respiration: Monitoring respiration patterns (rate, depth, and regularity) provides insights into physiological state, stress, and even cognitive load. Changes in breathing can be linked to emotional responses, attentional shifts, and effortful cognitive processing. Respiration can be measured using various methods, including respiratory belts, thermistors, or even indirectly through changes in chest impedance. Accurate respiration data is important for understanding physiological regulation and its interplay with other behavioral and cognitive measures.
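Two widely used time-domain HRV metrics are SDNN (the standard deviation of the RR intervals) and RMSSD (the root mean square of successive RR differences). The sketch below computes both, together with mean heart rate, assuming the RR intervals have already been extracted from the ECG or PPG signal and cleaned of artifacts such as missed or ectopic beats. SDNN is computed here with the sample standard deviation; conventions vary between tools.

```python
from math import sqrt
from statistics import mean, stdev
from typing import Dict, Sequence


def hrv_time_domain(rr_ms: Sequence[float]) -> Dict[str, float]:
    """Time-domain HRV metrics from successive RR (inter-beat) intervals in milliseconds."""
    if len(rr_ms) < 3:
        raise ValueError("need at least three RR intervals")
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]  # successive differences
    return {
        "mean_hr_bpm": 60000.0 / mean(rr_ms),                      # 60,000 ms per minute
        "sdnn_ms": stdev(rr_ms),                                   # overall variability
        "rmssd_ms": sqrt(sum(d * d for d in diffs) / len(diffs)),  # beat-to-beat variability
    }


print(hrv_time_domain([800.0, 810.0, 790.0, 800.0]))
```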

Integrating these diverse biophysical signals requires specialized hardware interfaces, synchronized data acquisition systems, and robust software for processing and analysis. The ability to correlate these physiological markers with observed behaviors and self-report data provides a powerful, multi-dimensional view of human experience.

Mangold Observation Studio

The advanced software suite for sophisticated sensor data-driven observational studies with comprehensive data collection and analysis capabilities.

4. Software & Platforms: The Digital Backbone of Data Acquisition and Analysis

Beyond the physical sensors, the software and platforms employed in an observational research lab play the most critical role in orchestrating data collection, managing complex experimental designs, and facilitating subsequent analysis. These tools transform raw sensor outputs into meaningful, synchronized datasets, enabling researchers to extract insights from multimodal information streams. While many solutions exist, specialized platforms often offer integrated functionalities tailored for behavioral research.

Note

The key features and benefits of specialized research software for capturing and analyzing behavioral data are explained below using Mangold software solutions as examples. These are representative of other products, such as Noldus Observer or iMotions. However, a point-by-point comparison would not be very useful, as the products are constantly evolving and offer similar but not identical features. It is crucial to understand the details of each product's functionality and user experience, as these can significantly affect the effectiveness and efficiency of data collection and analysis, and thus the overall value derived from the software. Users must therefore discuss the suitability of the software for their specific research projects individually with the provider.

Mangold VideoSyncPro: Precision Video Recording and Scenario Control

Mangold VideoSyncPro is a dedicated software solution designed for audio/video recording and scenario control within observational research settings. Its primary strength lies in its ability to synchronize video from multiple cameras with audio streams, external events, and markers and comments entered by the operator. This is crucial for studies requiring high temporal accuracy between all recorded data streams, for example when behaviors observed on audio/video must be related to physiological or environmental changes. Key features typically include:

- Multi-camera recording: Simultaneous capture from several video sources, allowing for comprehensive coverage of the research space and different perspectives on participant behavior.

- Multi-audio recording: Simultaneous capture from several audio sources, allowing audio feeds to be mixed with video feeds, which is crucial for later analysis.

- Remote control of AV devices: Cameras can be controlled remotely (pan/tilt/zoom), and microphones can be switched on or off and their volume adjusted during recording.

- External device synchronization: The ability to receive triggers or time-stamps from other sensors (e.g., eye trackers, physiological sensors) or experimental control systems, ensuring that all data streams are aligned as closely as possible.

- Scenario control: Facilitating the automation of experimental protocols, such as presenting specific stimuli at predefined times or in response to participant actions, thereby increasing experimental control and reproducibility.

VideoSyncPro acts as a central hub for audio-visual data acquisition, providing the foundational audio/video record that can then be enriched with data from other modalities.

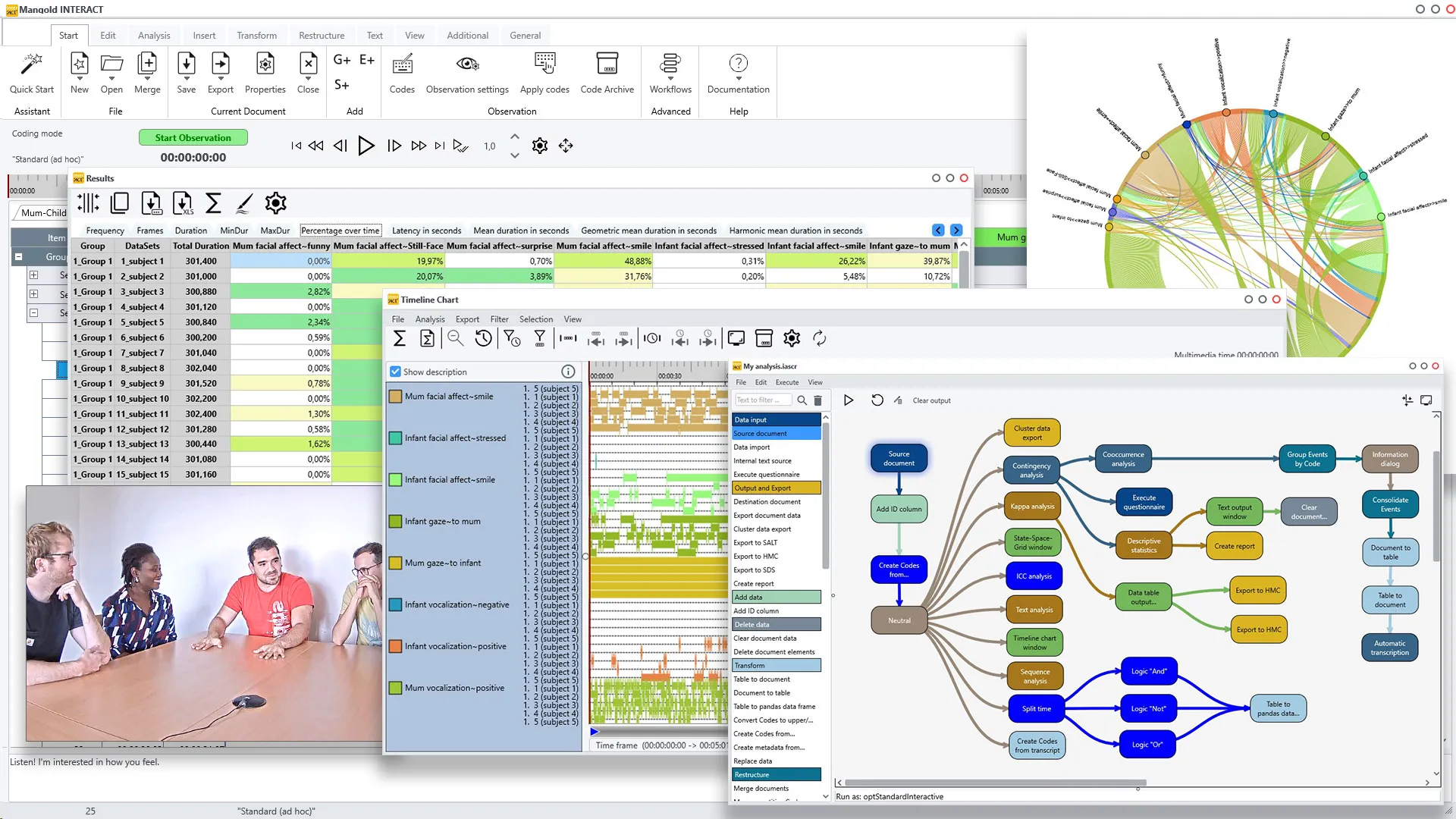

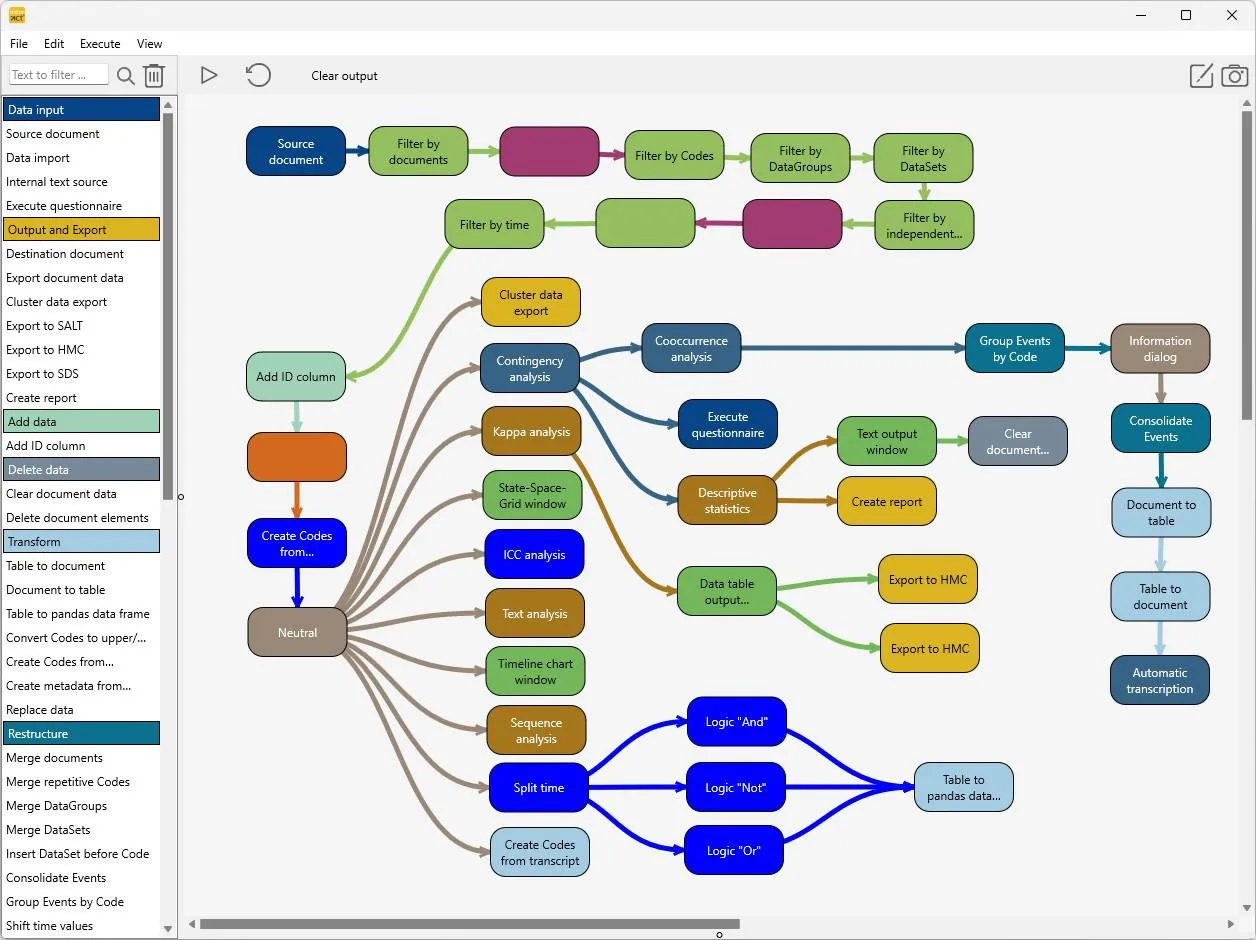

Mangold INTERACT: Advanced Audio/Video Coding and Data Analysis

Once audio/video data has been collected, the challenge shifts to making sense of it. Mangold INTERACT is a professional software platform for deductive and inductive data collection and qualitative analysis of multimedia files. It is designed for flexible and convenient analysis of observations in a wide range of experiments, making it suitable for almost any kind of observational research. INTERACT represents a paradigm shift from simple data collection to sophisticated information mining, enabling researchers to extract insights that cannot be detected by pure observation alone, with the goal of transforming complex findings into quantitative results.

Core Capabilities and Architecture:

INTERACT supports both inductive, explorative observation collection and deductive, coding system-based observations. The software can handle almost any kind of time-related observations or occurrences, with a temporal range from 9999 BC to 9999 AD and a precision of 100 nanoseconds for each time-stamped piece of information. This range and precision establish a new standard in observational research and make the software suitable for historical data analysis as well as contemporary research. The platform offers descriptive statistics and powerful analysis routines (for example, for finding behavioral patterns), complemented by full Python integration for advanced custom analysis workflows.
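The article does not describe how INTERACT stores its timestamps. As a back-of-the-envelope check only, the following Python snippet shows that a span of roughly 20,000 years at a resolution of 100 ns fits into a single signed 64-bit integer counter of ticks; the constant and function names are illustrative.

```python
TICKS_PER_SECOND = 10_000_000            # one tick = 100 ns
SECONDS_PER_YEAR = 365.2425 * 86_400     # mean Gregorian year


def ticks_for_span(years: float) -> int:
    """Number of 100-ns ticks needed to represent a span of `years` years."""
    return int(years * SECONDS_PER_YEAR * TICKS_PER_SECOND)


span = ticks_for_span(20_000)            # roughly 9999 BC to 9999 AD, rounded up
print(f"{span:.2e} ticks need {span.bit_length()} bits")
print("fits in a signed 64-bit integer:", span < 2**63)
```

The span comes to about 6.3 × 10^18 ticks and needs 63 bits, so it stays below the signed 64-bit limit of about 9.2 × 10^18.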

Video and Audio Processing:

One of INTERACT’s standout features is its ability to open and evaluate multiple videos simultaneously while maintaining perfect time synchronization. This capability is crucial for multi-camera setups where researchers film scenes from different angles and need to evaluate these videos together. The software provides complete control over video playback, allowing researchers to play and observe videos at almost any speed, from very fast to extremely slow, and to navigate frame by frame for precise data collection.

The audio processing capabilities are equally sophisticated. INTERACT can display soundtracks for each video, which proves invaluable in studies where audio information is essential. For example, in autism research where a participant might remain silent for extended periods before speaking, the audio visualization allows researchers to immediately identify and jump to speech occurrences rather than watching the entire video.
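INTERACT's own audio visualization is not described in detail here. As a generic illustration of how speech occurrences can be located automatically, the sketch below splits an audio signal into short frames, computes each frame's RMS energy, and merges loud frames into segments that a player could jump to. Energy thresholding is a deliberately crude stand-in for proper voice activity detection; the function name, frame length, threshold, and gap parameters are hypothetical, and samples are assumed to be normalized floats in [-1, 1].

```python
from math import sqrt
from typing import List, Optional, Sequence, Tuple


def find_loud_segments(samples: Sequence[float], sample_rate: int,
                       frame_ms: float = 20.0, threshold: float = 0.02,
                       min_gap_frames: int = 5) -> List[Tuple[float, float]]:
    """Return (start_s, end_s) segments whose per-frame RMS energy exceeds `threshold`."""
    frame_len = max(1, int(sample_rate * frame_ms / 1000))
    loud = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        rms = sqrt(sum(s * s for s in frame) / frame_len)
        loud.append(rms > threshold)

    segments: List[Tuple[float, float]] = []
    seg_start: Optional[int] = None
    quiet_run = 0
    for idx, is_loud in enumerate(loud):
        if is_loud:
            if seg_start is None:
                seg_start = idx
            quiet_run = 0
        elif seg_start is not None:
            quiet_run += 1
            if quiet_run > min_gap_frames:        # silence long enough: close the segment
                end_idx = idx - quiet_run + 1     # frame right after the last loud frame
                segments.append((seg_start * frame_len / sample_rate,
                                 end_idx * frame_len / sample_rate))
                seg_start, quiet_run = None, 0
    if seg_start is not None:                     # segment still open at the end of the audio
        end_idx = len(loud) - quiet_run
        segments.append((seg_start * frame_len / sample_rate, end_idx * frame_len / sample_rate))
    return segments
```

Short pauses of up to `min_gap_frames` frames are bridged, so a sentence with brief hesitations is reported as one segment rather than many fragments.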

Coding System Architecture:

The software allows users to define comprehensive coding or category systems with an almost unlimited number of codes describing events or contents. INTERACT supports arbitrarily large category systems with multiple branches and hierarchy levels, enabling detailed analysis of complex behaviors. A notable example is the Facial Action Coding System (FACS), which describes facial expressions in terms of action units, each corresponding to the movement of an individual facial muscle or muscle group. Due to INTERACT’s intelligent coding system structure, the entire FACS can be implemented with just three hierarchical levels.

Data Collection and Event Management:

The coding process in INTERACT is streamlined through keyboard shortcuts or mouse clicks while viewing videos. Importantly, data can be recorded even when the video is paused, providing flexibility for detailed analysis. The software stores all data as ‘events’, each containing a start time, end time, and almost any number of behavioral codes. This event-based structure forms the foundation for all subsequent analyses.
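The event-based structure described above can be modeled with a very small data type. The following sketch is hypothetical and does not reflect INTERACT's internal file format: each `Event` holds a start time, an end time, and any number of codes, and a `describe` function derives the most basic descriptive statistics, namely frequency, total duration, and mean duration per code.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple


@dataclass(frozen=True)
class Event:
    """Hypothetical event record: a time span plus any number of behavioral codes."""
    start: float                  # seconds
    end: float                    # seconds
    codes: Tuple[str, ...] = ()

    @property
    def duration(self) -> float:
        return self.end - self.start


def describe(events: Iterable[Event]) -> Dict[str, Dict[str, float]]:
    """Frequency, total duration, and mean duration per code."""
    freq: Dict[str, int] = defaultdict(int)
    total: Dict[str, float] = defaultdict(float)
    for ev in events:
        for code in ev.codes:
            freq[code] += 1
            total[code] += ev.duration
    return {c: {"count": freq[c], "total_s": total[c], "mean_s": total[c] / freq[c]} for c in freq}


events = [Event(0.0, 4.0, ("Speaking", "Question")), Event(5.0, 7.0, ("Speaking",))]
print(describe(events))
```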

INTERACT supports extensive transcriptions, allowing almost unlimited text per event, and enables the assignment of additional data sources (PDFs, text documents, spreadsheets) to video sections via simple drag-and-drop. This multimedia integration capability allows researchers to create rich, contextual annotations that go beyond simple behavioral coding.

Data Structuring and Organization:

Events in INTERACT are recorded in groups and sets, enabling sophisticated data structuring during the logging process. Researchers can organize data by subjects, test situations, or almost any other relevant categorization. The software offers automated routines to group events or insert new datasets, providing almost unlimited flexibility in data organization both during and after collection.

The metadata capabilities are particularly robust, allowing users to define custom variables and value lists for datasets. This enables easy filtering and selection of specific subgroups (e.g., all female participants, specific age ranges, particular experimental conditions) for targeted analysis.

Advanced Analysis and Information Mining:

The true value of INTERACT lies in its information mining capabilities. Unlike traditional statistical programs that analyze data case-by-case, INTERACT’s timeline-based approach enables sophisticated temporal analysis. The software can perform several types of advanced analyses:

- Descriptive Analysis: INTERACT answers fundamental questions about frequency, duration, and temporal relationships. Examples include speaking-time distributions, question frequencies, and the temporal relationships between different behavioral categories.

- Co-Occurrence Analysis: The software can identify when and which different behaviors occur simultaneously, which would be cognitively difficult for human observers to detect in real time. For instance, it can quantify how often emotionally motivated questions occur during visualization periods and calculate the time share of combined behaviors versus individual behaviors.

- Static Interval Analysis: INTERACT can examine what happens statistically before or after specific events within defined time intervals. This might involve analyzing what occurs 3 seconds before an emotionally motivated question or 5 seconds after a particular behavioral event.

- Contingency Analysis: The software analyzes contingent responses within specific time spans following events and examines how these patterns change with different variables. This enables researchers to understand behavioral sequences and their contextual dependencies.

- Sequence and Pattern Analysis: INTERACT determines transition probabilities between behaviors and identifies stable or changing behavioral patterns across different conditions, subjects, or contexts. This capability is crucial for understanding behavioral dynamics and developing predictive models.

- Quality Assurance and Reliability: INTERACT includes comprehensive tools for assessing inter-rater reliability, such as Cohen’s kappa and the intraclass correlation coefficient (ICC). These statistical measures help ensure the objectivity and reproducibility of behavioral observations, which is essential for scientific validity.

- Integration and Extensibility: The software provides extensive integration capabilities, allowing import and display of data from various sensors (eye-tracking, physiological data) alongside video streams. This multimodal integration enables researchers to correlate behavioral events with physiological changes or gaze patterns, providing a comprehensive view of participant responses.
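Textbook versions of three of the analyses listed above can be sketched in a few lines of Python. The code is illustrative and not INTERACT's implementation: `cooccurrence_time` sums the overlap between two sets of behavior intervals, `transition_probabilities` estimates lag-1 probabilities P(next | current) from an ordered sequence of codes, and `cohens_kappa` computes Cohen's kappa, κ = (p_o - p_e) / (1 - p_e), for two raters who coded the same units.

```python
from collections import Counter, defaultdict
from typing import Dict, Sequence, Tuple

Interval = Tuple[float, float]  # (start, end) in seconds


def cooccurrence_time(a: Sequence[Interval], b: Sequence[Interval]) -> float:
    """Total time during which a behavior from `a` and one from `b` occur simultaneously.

    Assumes the intervals within each list do not overlap one another.
    """
    total = 0.0
    for a_start, a_end in a:
        for b_start, b_end in b:
            total += max(0.0, min(a_end, b_end) - max(a_start, b_start))
    return total


def transition_probabilities(sequence: Sequence[str]) -> Dict[str, Dict[str, float]]:
    """Lag-1 transition probabilities P(next | current) from an ordered code sequence."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(sequence, sequence[1:]):
        counts[current][nxt] += 1
    result: Dict[str, Dict[str, float]] = {}
    for current, followers in counts.items():
        n = sum(followers.values())
        result[current] = {nxt: k / n for nxt, k in followers.items()}
    return result


def cohens_kappa(rater1: Sequence[str], rater2: Sequence[str]) -> float:
    """Cohen's kappa for two raters who assigned one code to each of the same units."""
    if not rater1 or len(rater1) != len(rater2):
        raise ValueError("both raters must code the same, non-empty set of units")
    n = len(rater1)
    p_o = sum(x == y for x, y in zip(rater1, rater2)) / n    # observed agreement
    c1, c2 = Counter(rater1), Counter(rater2)
    p_e = sum(c1[k] * c2[k] for k in c1) / (n * n)           # agreement expected by chance
    if p_e == 1.0:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)
```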

For advanced users, INTERACT offers a Workflow Editor with full Python integration, allowing users to extend the software with custom data import, export, and evaluation functions. These custom functions can be called directly from the INTERACT menu bar, making advanced analyses accessible to entire research teams.

Advantages Over Alternative Solutions: INTERACT addresses several critical limitations of alternative approaches, including freeware video coding solutions. The most common and most severe issues arise when the structure or coding of the data needs to be reorganized to answer unforeseen analytical questions or to add further information. With software that is not designed specifically for behavioral research, the biggest challenges are flexibly importing data created by third-party systems (e.g., transcripts or time-stamped event data) and exporting raw data for external manipulation and further processing. These problems often require time-consuming and error-prone manual data cleaning and reformatting, as well as the use of several software products, each designed for a different application.

Professional solutions like INTERACT prevent these issues through standardized workflows, consistent data structures, and, most importantly, flexible data manipulation, import, and export capabilities. The INTERACT software covers the entire observational research workflow from audio/video-based content-coding and transcription to comprehensive analysis, making it a complete solution for behavioral research laboratories.

INTERACT: One Software for Your Entire Observational Research Workflow

From audio/video-based content-coding and transcription to analysis - INTERACT has you covered.

Mangold Observation Studio: Comprehensive Multimodal Data Collection and Analysis

Mangold Observation Studio is a comprehensive, integrated solution for multimodal data collection and analysis. It often serves as an alternative or an add-on to VideoSyncPro and INTERACT, as it can record a wide array of sensors and manage the entire research workflow from experiment design to data export.

Its key advantages include:

- Centralized control: A single interface for managing and synchronizing multiple data streams, including video, audio, eye-tracking, and various biophysical sensors.

- Real-time monitoring: Allowing researchers to view synchronized data streams as they are being collected, enabling on-the-fly adjustments and quality checks.

- Integrated analysis environment: Providing tools for both qualitative coding and quantitative analysis within the same platform, reducing the need for data transfer between different software packages.

- Scalability: Designed to handle large volumes of multimodal data, making it suitable for complex studies with numerous participants and long recording durations.

Observation Studio aims to provide a seamless, end-to-end solution for observational research, minimizing the technical hurdles associated with multimodal data integration and analysis.

Which option is best suited for each situation?

Whether Mangold Observation Studio should be used as an alternative or as an add-on to INTERACT and VideoSyncPro depends on the specific research setup and on the data collection and analysis requirements, and needs to be discussed in detail.

A) Highly accurate audio/video recording: When a research lab requires highly accurate audio/video recording from multiple audio/video devices, Mangold VideoSyncPro is the ideal solution for recording, combined with Mangold INTERACT for analysis.

B) Research focusing on sensor data: If the research focuses on recording various sensor data streams in a time-synchronized manner and audio/video is merely an additional feature (e.g., screen recording, users’ facial expressions), Mangold Observation Studio is the optimal choice.

C) Sensor data and Audio/Video equally important: If ensuring the synchronized recording of various sensor data streams is as crucial as capturing high-quality multiple audio and video streams, all three software solutions can be seamlessly integrated.

Integration Considerations: Beyond Proprietary Solutions

Researchers often work with a diverse ecosystem of hardware and software from different vendors. Careful planning of how these components will be integrated is therefore vital. This involves:

- Open standards and APIs: Prioritizing sensors and software that support open communication protocols (e.g., LSL, UDP/IP) or provide well-documented Application Programming Interfaces (APIs) for custom integration.

- Modular design: Building a lab infrastructure that allows for the flexible addition or removal of sensors and software components without disrupting the entire system.

- Data format compatibility: Ensuring that data can be easily imported and exported between different software packages, often requiring conversion scripts or middleware.

- Custom scripting and programming: Leveraging languages like Python or MATLAB to develop custom scripts for data acquisition, processing, and synchronization when off-the-shelf solutions are insufficient.

Before making a purchase!

The digital backbone of a modern observational research lab is formed by effective software and platform selection, coupled with a strategic approach to integration. This enables the capture and analysis of rich, multimodal behavioral data. Integrated solutions, such as the Mangold software products mentioned above, meet these requirements and provide a significant advantage over products with less flexibility or integrated functionality. Because this choice strongly affects the effectiveness and value of a research project’s outcomes, researchers should discuss and understand it carefully before purchasing any software or equipment.

5. Protocols & Communication Standards: Ensuring Seamless Data Flow and Synchronization

In a multimodal observational research lab, the ability of diverse hardware and software components to communicate effectively and synchronize their data streams is paramount. This is achieved through the adherence to specific protocols and communication standards, which dictate how data is formatted, transmitted, and time-stamped. Without robust communication, the rich, multi-layered data collected from various sensors would be fragmented and difficult to integrate meaningfully.

Lab Streaming Layer (LSL): A Universal Data Bus for Research

Lab Streaming Layer (LSL) has emerged as a standard for real-time data exchange in neuroscience and psychological research. LSL provides a unified framework for sending and receiving time-series data streams from various sources (e.g., EEG, eye-tracker, motion capture) to various destinations (e.g., recording software, analysis scripts) over a local network. Its key advantages include:

- Hardware and software agnostic: LSL is designed to work with virtually any type of sensor or software, as long as a compatible LSL outlet (sender) and inlet (receiver) are implemented. This flexibility is crucial for integrating heterogeneous systems.

- Time synchronization: LSL incorporates robust mechanisms for time synchronization, ensuring that data from different streams can be accurately aligned. It uses a high-precision clock and provides tools for estimating and correcting for network latency and clock drift between devices.

- Metadata handling: LSL streams carry rich metadata, including channel names, sampling rates, data types, and device information, which is essential for proper data interpretation and analysis.

- Real-time capabilities: LSL is optimized for low-latency data transmission, making it suitable for real-time applications such as biofeedback, neurofeedback, or closed-loop experimental designs.

LSL simplifies the complex task of integrating multiple data streams, allowing researchers to focus more on experimental design and data analysis rather than low-level communication protocols.
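The drift-correction idea can be made concrete with a short example. The following minimal Python sketch fits a straight line to periodic clock-offset measurements and uses that line to map remote timestamps onto the local clock. It assumes each offset is the amount to add to a remote timestamp to express it in local time. LSL exposes offsets of this kind to receiving applications; in pylsl, this is `StreamInlet.time_correction()`. The sketch also assumes the offsets have already been collected at known remote times. The function names are hypothetical, and this is an illustration of the principle, not LSL's internal algorithm.

```python
from typing import List, Tuple


def fit_offset_model(measurements: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Least-squares fit of offset(t) = intercept + slope * t.

    measurements: (remote_time, offset) pairs, where offset is the value to add
    to a remote timestamp to express it on the local clock.
    A non-zero slope means the two clocks drift relative to each other.
    """
    n = len(measurements)
    if n < 2:
        raise ValueError("need at least two offset measurements")
    mean_t = sum(t for t, _ in measurements) / n
    mean_o = sum(o for _, o in measurements) / n
    cov = sum((t - mean_t) * (o - mean_o) for t, o in measurements)
    var = sum((t - mean_t) ** 2 for t, _ in measurements)
    if var == 0:
        raise ValueError("measurements must be taken at different times")
    slope = cov / var
    intercept = mean_o - slope * mean_t
    return intercept, slope


def to_local_time(remote_ts: float, intercept: float, slope: float) -> float:
    """Map a remote timestamp onto the local timeline using the fitted model."""
    return remote_ts + intercept + slope * remote_ts
```

Real offset measurements are noisy. One option is to refit the model over a sliding window of recent measurements, so that slow changes in drift are tracked.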

Note

In environments with many LSL streams, the receiving software must be mature and well engineered. The substantial network load increases the likelihood of unexpected delays and of data packets arriving in a different order than they were sent. This makes it challenging to display and store the data in a time-synchronized manner. Mangold Observation Studio addresses these issues with a wide variety of strategies designed to achieve the highest precision that the given hardware and software allow.
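One common way to cope with samples that arrive out of order is a small jitter buffer that holds incoming samples briefly and releases them in timestamp order. The minimal Python sketch below assumes each sample already carries a timestamp on a common clock. The `max_delay` parameter and the function name are hypothetical. This is a generic technique, not a description of how Mangold Observation Studio or LSL handles the problem internally.

```python
import heapq
from typing import List, Sequence, Tuple

Packet = Tuple[float, List[float]]  # (timestamp, sample values)


def reorder(packets: Sequence[Packet], max_delay: float) -> Tuple[List[Packet], List[Packet]]:
    """Restore timestamp order using a small jitter buffer.

    Each packet is held until a packet at least max_delay newer has arrived.
    Packets older than the last released one can no longer be placed in order
    and are returned separately as 'late'.
    """
    heap: List[Tuple[float, int, List[float]]] = []
    ordered: List[Packet] = []
    late: List[Packet] = []
    newest = float("-inf")
    last_released = float("-inf")
    for seq, (ts, values) in enumerate(packets):
        if ts < last_released:
            late.append((ts, values))
            continue
        # seq breaks ties so equal timestamps never compare the value lists
        heapq.heappush(heap, (ts, seq, values))
        newest = max(newest, ts)
        while heap and heap[0][0] <= newest - max_delay:
            ts_out, _, values_out = heapq.heappop(heap)
            last_released = ts_out
            ordered.append((ts_out, values_out))
    while heap:  # end of recording: flush whatever is still buffered
        ts_out, _, values_out = heapq.heappop(heap)
        ordered.append((ts_out, values_out))
    return ordered, late
```

The buffer length involves a trade-off. A larger `max_delay` tolerates more network jitter but adds the same amount of delay before data can be displayed.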

UDP/IP and TCP/IP: Fundamental Network Protocols

Underlying many communication systems in a research lab are the fundamental internet protocols: UDP/IP (User Datagram Protocol/Internet Protocol) and TCP/IP (Transmission Control Protocol/Internet Protocol). These protocols govern how data packets are sent across networks.

- UDP/IP: UDP is a connectionless protocol, meaning it does not establish a persistent connection between sender and receiver before transmitting data. It is faster and has lower overhead than TCP, making it suitable for applications where speed is critical and some data loss can be tolerated, such as real-time streaming of sensor data (e.g., video frames, high-frequency physiological signals). While UDP doesn’t guarantee delivery or order, its efficiency makes it valuable for high-throughput, low-latency data transmission.

- TCP/IP: TCP is a connection-oriented protocol that establishes a reliable, ordered, and error-checked connection between applications. It guarantees that data packets are delivered in the correct order and without loss, retransmitting any lost or corrupted packets. This reliability makes TCP/IP ideal for applications where data integrity is paramount, such as transmitting experimental commands, important sensor data, configuration files, or critical event markers that must not be missed.

Many lab systems utilize a combination of these protocols, leveraging UDP for high-speed data streams and TCP for control signals and critical information.
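The practical difference between the two protocols shows up directly in code. This minimal Python sketch uses the standard `socket` module on the loopback interface. The UDP function sends a single datagram without any connection setup. The TCP function first establishes a connection and then sends a reliable, ordered byte stream. The function names and payloads are illustrative only. In a real lab, sender and receiver would normally run on different machines.

```python
import socket


def udp_roundtrip(payload: bytes) -> bytes:
    """Send one datagram to a local UDP socket: no connection, no delivery guarantee."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        receiver.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        receiver.settimeout(1.0)  # on a real network the datagram could simply be lost
        sender.sendto(payload, receiver.getsockname())
        data, _addr = receiver.recvfrom(65535)
        return data
    finally:
        sender.close()
        receiver.close()


def tcp_roundtrip(payload: bytes) -> bytes:
    """Send bytes over TCP: connection handshake first, then a reliable ordered stream."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        server.bind(("127.0.0.1", 0))
        server.listen(1)
        server.settimeout(1.0)
        client = socket.create_connection(server.getsockname(), timeout=1.0)
        conn, _addr = server.accept()
        try:
            conn.settimeout(1.0)
            client.sendall(payload)
            client.shutdown(socket.SHUT_WR)  # tell the receiver no more data follows
            chunks = []
            while True:
                chunk = conn.recv(4096)
                if not chunk:  # empty read: the sender closed its side
                    break
                chunks.append(chunk)
            return b"".join(chunks)
        finally:
            conn.close()
            client.close()
    finally:
        server.close()
```

In this split, a stream of sensor samples would use the UDP pattern, and an experiment command such as a trial-start marker would use the TCP pattern.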

IEEE 1588 PTP (Precision Time Protocol): The Gold Standard for Time Synchronization

For applications demanding the highest levels of time synchronization accuracy, particularly across multiple networked devices, IEEE 1588 PTP (Precision Time Protocol) is the gold standard. PTP is a protocol designed to synchronize clocks throughout a computer network with high precision, often achieving sub-microsecond accuracy. This is critical in observational research where events across different sensors need to be aligned with extreme temporal fidelity (e.g., correlating a specific neural spike with a precise eye movement).

Like NTP, PTP exchanges time-stamped messages between a master clock and slave clocks, which allows each slave clock to adjust its internal time to match the master. Unlike NTP, however, PTP is designed for hardware timestamping at the network interface and for PTP-aware network equipment (boundary or transparent clocks) that compensates for switching delays. As a result, it can measure network path delay far more precisely and correct for known path asymmetries, providing a much higher level of accuracy. Implementing PTP often requires specialized network hardware (e.g., PTP-enabled switches) and network interface cards, but for research requiring the utmost temporal precision, it is an indispensable technology. The ability to achieve such fine-grained time alignment across all data streams is a significant technical achievement that underpins the validity of complex multimodal analyses.
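The core PTP calculation can be illustrated with four timestamps from one Sync / Delay_Req exchange. In the basic delay request-response mechanism, the slave's offset from the master and the mean path delay follow from t1 to t4. The minimal Python sketch below shows only this arithmetic. Real implementations add hardware timestamps, correction fields from transparent clocks, and filtering over many exchanges. The function name is hypothetical.

```python
from typing import Tuple


def ptp_offset_and_delay(t1: float, t2: float, t3: float, t4: float) -> Tuple[float, float]:
    """Offset and mean path delay from one PTP Sync / Delay_Req exchange.

    t1: master sends Sync (master clock)
    t2: slave receives Sync (slave clock)
    t3: slave sends Delay_Req (slave clock)
    t4: master receives Delay_Req (master clock)
    Assumes a symmetric path, i.e. equal delay in both directions.
    """
    mean_path_delay = ((t2 - t1) + (t4 - t3)) / 2
    offset_from_master = ((t2 - t1) - (t4 - t3)) / 2
    return offset_from_master, mean_path_delay
```

Consider an example in microseconds where the slave clock runs 5 µs ahead and the one-way delay is 2 µs. Then t1 = 1000, t2 = 1007, t3 = 1050, and t4 = 1047, which yields an offset of 5 and a delay of 2. The slave subtracts the offset from its clock. If the two directions have unequal delays, the computed offset is wrong by half the difference. This is why known asymmetries must be corrected separately.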

6. Technological Features & Concepts: Enhancing Observational Research Capabilities

The integration of advanced hardware, sophisticated software, and robust communication protocols enables a suite of powerful technological features and concepts that significantly enhance the capabilities of an observational research lab. These features move beyond mere data collection, facilitating deeper analysis, more dynamic experimental designs, and ultimately, richer scientific insights.

Event Triggering: Precision in Experimental Control

Event Triggering refers to the mechanism by which specific events or stimuli are initiated within an experiment, often in precise temporal relation to other data streams. This can involve sending signals from a central experimental control system to external devices (e.g., presenting an image on a screen, playing an audio cue, activating a haptic feedback device) or receiving signals from sensors (e.g., a button press, a specific physiological response) to mark their occurrence. The precision of event triggering is crucial for accurate time synchronization and for establishing causal relationships between stimuli, responses, and internal states. Modern systems often use dedicated hardware triggers (e.g., TTL pulses), which can provide sub-millisecond accuracy, or network-based triggers (e.g., LSL markers), whose accuracy depends on network conditions and clock synchronization. Precise triggers allow for fine-grained analysis of reaction times and event-related potentials.
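Once stimulus and response markers share a common timeline, reaction times can be derived directly from the marker timestamps. The minimal Python sketch below pairs each stimulus with the first response that follows it within a response window. The function name and the `max_rt` window are hypothetical. The sketch assumes both marker lists are already synchronized. It also does not prevent one response from being counted for two overlapping stimuli.

```python
from bisect import bisect_left
from typing import List, Optional, Sequence


def reaction_times(stimulus_ts: Sequence[float], response_ts: Sequence[float],
                   max_rt: float) -> List[Optional[float]]:
    """For each stimulus marker, the latency to the first response within max_rt."""
    responses = sorted(response_ts)
    rts: List[Optional[float]] = []
    for s in stimulus_ts:
        i = bisect_left(responses, s)  # index of the first response at or after the stimulus
        if i < len(responses) and responses[i] - s <= max_rt:
            rts.append(responses[i] - s)
        else:
            rts.append(None)  # no response inside the window: a miss
    return rts
```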

Video Coding: Quantifying Behavior from Visual Data

Video Coding, also known as behavioral coding or annotation, is the systematic process of transforming continuous video recordings of behavior into discrete, quantifiable data points. Researchers define specific behaviors (e.g., gaze aversion, vocalization, specific motor actions) and then systematically mark their onset, offset, and duration on the video timeline. This process can be manual, semi-automated, or fully automated. Manual coding, while labor-intensive, allows for nuanced interpretation. Semi-automated tools can assist by detecting certain features (e.g., facial expressions, body posture) that coders then refine. The output of video coding is a structured dataset that can be analyzed statistically, allowing researchers to quantify behavioral frequencies, durations, and sequences. The reliability of video coding is often assessed using inter-rater agreement metrics (e.g., Cohen’s Kappa), ensuring the objectivity and reproducibility of the observations.
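Cohen's Kappa compares the observed agreement between two coders, p_o, with the agreement expected by chance, p_e, as κ = (p_o − p_e) / (1 − p_e). The minimal Python sketch below assumes both coders labeled the same units with one category each, for example fixed video intervals. The function name is hypothetical.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(coder_a: Sequence[Hashable], coder_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two coders who labeled the same units."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must label the same non-empty set of units")
    n = len(coder_a)
    # observed agreement: share of units that received the same label
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # chance agreement: product of each coder's marginal category proportions
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1 - expected)
```

Here is an example with six intervals coded as "gaze", "talk", or "other", where the coders agree on five. The observed agreement is 5/6 and the chance agreement is 13/36, which gives κ = 17/23 ≈ 0.74.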

Data Fusion: Integrating Disparate Information

Data Fusion is the process of combining data from multiple disparate sources to produce a more consistent, accurate, and useful representation of a phenomenon than could be achieved by using any single data source alone. In an observational lab, this involves integrating data from video, audio, eye trackers, and various biophysical sensors. The goal is to create a unified dataset where all measurements are precisely aligned in time and space. Data fusion techniques can range from simple concatenation of time-series data to more complex statistical or machine learning approaches that identify patterns and relationships across modalities. Effective data fusion is essential for multimodal research, enabling researchers to explore complex interactions between different aspects of behavior and physiology.
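The simplest form of data fusion mentioned above is resampling every stream onto one shared time grid and combining them into rows. The minimal Python sketch below does this with linear interpolation. Several assumptions apply. Timestamps are already synchronized and sorted. The signals are continuous, so interpolation does not suit categorical event streams. Values outside a stream's range are held at the nearest end value. The stream names and functions are hypothetical.

```python
from bisect import bisect_right
from typing import Dict, List, Sequence, Tuple


def interpolate_at(times: Sequence[float], values: Sequence[float],
                   query: Sequence[float]) -> List[float]:
    """Linearly interpolate one stream (sorted timestamps) at the query times."""
    out = []
    for q in query:
        if q <= times[0]:
            out.append(values[0])  # hold the first value before the stream starts
        elif q >= times[-1]:
            out.append(values[-1])  # hold the last value after the stream ends
        else:
            i = bisect_right(times, q)  # times[i - 1] <= q < times[i]
            t0, t1 = times[i - 1], times[i]
            v0, v1 = values[i - 1], values[i]
            out.append(v0 + (v1 - v0) * (q - t0) / (t1 - t0))
    return out


def fuse(streams: Dict[str, Tuple[Sequence[float], Sequence[float]]],
         grid: Sequence[float]) -> List[Dict[str, float]]:
    """Build one row per grid time with one column per stream."""
    columns = {name: interpolate_at(t, v, grid) for name, (t, v) in streams.items()}
    rows = []
    for k, g in enumerate(grid):
        row = {"time": g}
        for name, col in columns.items():
            row[name] = col[k]
        rows.append(row)
    return rows
```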

Time Synchronization: The Cornerstone of Multimodal Analysis

While mentioned previously as a challenge, Time Synchronization is also a critical technological concept that underpins all multimodal observational research. It refers to the process of ensuring that all data streams, originating from different sensors and recording devices, are precisely aligned to a common timeline. Without accurate time synchronization, correlating events across modalities (e.g., a specific physiological response with a particular behavioral action) becomes impossible or highly unreliable. Techniques range from hardware-based solutions (e.g., shared clock signals, PTP) to software-based methods (e.g., LSL’s internal clock correction algorithms, post-hoc alignment based on shared event markers). Achieving and maintaining high-precision time synchronization is a continuous technical challenge that requires careful planning and robust implementation.
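Post-hoc alignment based on shared event markers can be as simple as estimating a constant clock offset between two recordings of the same markers. The minimal Python sketch below uses the median difference, which is robust to a few badly timestamped markers. It assumes the markers are already matched one-to-one. It corrects only a constant offset; if the clocks also drift, a linear fit of offset against time is needed instead. The function names are hypothetical.

```python
from statistics import median
from typing import List, Sequence


def estimate_offset(markers_ref: Sequence[float], markers_dev: Sequence[float]) -> float:
    """Median clock offset between two recordings of the same marker events.

    markers_ref[i] and markers_dev[i] must be the same event, as timestamped by
    the reference device and by the device to be aligned.
    """
    if len(markers_ref) != len(markers_dev) or not markers_ref:
        raise ValueError("need matched, non-empty marker lists")
    return median([r - d for r, d in zip(markers_ref, markers_dev)])


def align(timestamps: Sequence[float], offset: float) -> List[float]:
    """Shift a device's timestamps onto the reference timeline."""
    return [t + offset for t in timestamps]
```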

Real-Time Event Detection: Dynamic Insights

Real-Time Event Detection involves identifying specific occurrences or patterns within data streams as they happen, rather than waiting for post-hoc analysis. This capability is particularly valuable for adaptive experimental designs, where the experimental paradigm needs to respond dynamically to a participant’s behavior or physiological state. For example, a system might detect a sudden increase in GSR (indicating arousal) and automatically trigger a new stimulus presentation. Real-time event detection relies on efficient algorithms and computational resources capable of processing high-volume data streams with minimal latency. It opens up possibilities for closed-loop experiments, biofeedback applications, and immediate feedback on experimental conditions.
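The GSR example can be expressed as a streaming detector that compares each new sample with a rolling baseline. The minimal Python sketch below flags a rise when a sample exceeds the mean of the preceding `window` samples by `threshold`. A refractory period prevents one arousal response from triggering repeatedly. All parameter values and names are hypothetical, and real GSR analysis usually involves filtering and more careful response detection.

```python
from collections import deque
from typing import Deque, Iterable, Iterator, Tuple


def detect_rises(samples: Iterable[Tuple[float, float]], window: int,
                 threshold: float, refractory: float) -> Iterator[float]:
    """Yield a timestamp whenever a sample exceeds the rolling baseline by threshold.

    samples: (timestamp, value) pairs arriving in real time.
    window: number of preceding samples whose mean forms the baseline.
    refractory: minimum seconds between two detections.
    """
    baseline: Deque[float] = deque(maxlen=window)
    last_event = float("-inf")
    for ts, value in samples:
        if len(baseline) == window:
            mean = sum(baseline) / window
            if value - mean >= threshold and ts - last_event >= refractory:
                last_event = ts
                yield ts  # a closed-loop controller could present a new stimulus here
        baseline.append(value)
```

Because the detector is a generator, it processes each sample as it arrives instead of waiting for the full recording.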

Sensor Fusion: Beyond Simple Integration

Building upon data fusion, Sensor Fusion refers to the process of combining data from multiple sensors to improve the accuracy, completeness, or reliability of the information. While data fusion is about bringing data together, sensor fusion often involves more sophisticated algorithms that leverage the complementary strengths of different sensors to create a more robust measurement. For example, combining data from an accelerometer and a gyroscope can provide a more accurate estimate of head movement than either sensor alone. In an observational lab, sensor fusion might involve combining eye-tracking data with head-mounted display orientation to precisely determine gaze direction in a dynamic environment, or integrating motion capture data with video to refine behavioral annotations.
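The accelerometer-and-gyroscope example is often solved with a complementary filter. The gyroscope is integrated for short-term accuracy, and the result is continuously pulled toward the drift-free but noisy tilt angle derived from gravity. The minimal Python sketch below estimates a single pitch angle. The axis convention (pitch = atan2(acc_x, acc_z)), the initial angle of zero, and the weight `alpha` are assumptions. Production systems often use Kalman or Madgwick-type filters instead.

```python
import math
from typing import Iterable, List, Tuple


def complementary_pitch(samples: Iterable[Tuple[float, float, float, float]],
                        alpha: float = 0.98) -> List[float]:
    """Fuse gyroscope and accelerometer readings into a pitch angle (radians).

    samples: (dt, gyro_rate, acc_x, acc_z), with dt in seconds and gyro_rate in
    rad/s about the pitch axis. acc_x and acc_z may use any consistent unit.
    """
    angle = 0.0  # assumed starting orientation
    out = []
    for dt, gyro_rate, acc_x, acc_z in samples:
        acc_angle = math.atan2(acc_x, acc_z)  # tilt estimated from the gravity direction
        # integrate the gyro (short-term), blend toward the accelerometer (long-term)
        angle = alpha * (angle + gyro_rate * dt) + (1 - alpha) * acc_angle
        out.append(angle)
    return out
```

With `alpha` close to 1, the estimate follows fast gyroscope changes. Over several seconds, it still converges to the accelerometer's tilt, which removes gyroscope drift.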

Closed-Loop Systems: Responsive Experimental Paradigms

Closed-Loop Systems are experimental setups where the output of a system (e.g., a participant’s physiological response or behavior) feeds back into the system to modify its input (e.g., stimulus presentation). This creates a dynamic, interactive experimental environment that can adapt to the participant in real-time. Examples include neurofeedback systems, in which participants see a representation of their own brain activity so that they can learn to regulate it, and adaptive learning environments that adjust difficulty based on performance. Implementing closed-loop systems requires robust real-time data acquisition, processing, and event triggering capabilities, along with sophisticated algorithms to determine the appropriate feedback or intervention.
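A classic, very small closed-loop controller for adapting difficulty to performance is the "2-down/1-up" staircase from psychophysics. The task gets harder after two consecutive correct responses and easier after any error. This rule converges to the difficulty at which the participant answers about 70.7% of trials correctly. The minimal Python sketch below assumes that a higher `level` means a harder task. The class name, step size, and bounds are hypothetical.

```python
from typing import List


class Staircase:
    """2-down/1-up adaptive staircase: performance feeds back into task difficulty."""

    def __init__(self, level: float, step: float, min_level: float, max_level: float):
        self.level = level
        self.step = step
        self.min_level = min_level
        self.max_level = max_level
        self._correct_streak = 0
        self.history: List[float] = [level]

    def update(self, correct: bool) -> float:
        """Feed back one response and return the difficulty for the next trial."""
        if correct:
            self._correct_streak += 1
            if self._correct_streak == 2:
                self.level = min(self.max_level, self.level + self.step)  # harder
                self._correct_streak = 0
        else:
            self.level = max(self.min_level, self.level - self.step)  # easier
            self._correct_streak = 0
        self.history.append(self.level)
        return self.level
```

In a live experiment, `update()` would be called after each trial, and the returned level would configure the next stimulus.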

AI-Assisted Transcription: Automating Qualitative Data Analysis

The advent of artificial intelligence (AI) is revolutionizing the processing of qualitative data in observational research. AI-Assisted Transcription utilizes speech-to-text algorithms to automatically convert audio recordings of verbal protocols, interviews, or spontaneous speech into written text. This significantly reduces the labor-intensive process of manual transcription, making large-scale qualitative data analysis more feasible. While AI transcription is not yet perfect and often requires human review and correction, it provides a valuable first pass, allowing researchers to quickly search, analyze, and code verbal data. Further AI applications extend to automated behavioral recognition from video, sentiment analysis from speech, and even automated event detection.
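Human review of AI transcripts is easier to plan when transcription quality is measured. A common metric is word error rate (WER): the number of substituted, deleted, and inserted words, divided by the number of words in a human-corrected reference. The minimal Python sketch below computes WER with a word-level edit distance. Its normalization is only lowercasing and splitting on whitespace, which is an assumption. Punctuation is not removed. The function name is hypothetical.

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref: List[str] = reference.lower().split()
    hyp: List[str] = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript is empty")
    # prev[j]: edit distance between the previous reference prefix and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[-1] / len(ref)
```

For example, transcribing "the participant looked at the screen" as "the participant looked at a screen" is one substitution in six words, a WER of about 0.17.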

GPU-Powered Processing: Accelerating Data Analysis

The sheer volume and complexity of multimodal data often necessitate significant computational power. GPU-Powered Processing leverages the parallel processing capabilities of graphics processing units (GPUs) to accelerate computationally intensive tasks such as video processing, machine learning algorithms, and complex statistical modeling. GPUs are particularly well-suited for tasks that can be broken down into many smaller, independent computations, such as image recognition, neural network training, and signal processing. Integrating GPU-powered workstations or servers into the lab’s IT infrastructure can dramatically reduce data processing times, enabling faster turnaround for analysis and iteration on research questions.

Latency Management: Minimizing Delays in Real-Time Systems

Latency Management is a critical concern in any real-time observational system. Latency refers to the delay between an event occurring (e.g., a participant’s response, a sensor reading) and that event being registered, processed, or acted upon by the system. In closed-loop experiments or real-time analysis, high latency can compromise the validity of the experiment or the effectiveness of the intervention. Effective latency management involves optimizing hardware (e.g., high-speed data buses, dedicated processing units), software (e.g., efficient algorithms, optimized code), and network configurations (e.g., low-latency protocols, direct connections) to minimize delays. Understanding and quantifying latency across the entire data pipeline is essential for designing robust real-time systems.
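Quantifying latency across the pipeline can start with logging a timestamp at each stage for every event and summarizing the differences. The minimal Python sketch below reports the mean, the 95th percentile (nearest-rank method), and the maximum in milliseconds. It does this for each pair of consecutive stages and for the end-to-end path. It assumes all stage timestamps come from one common clock. The stage names and the function are hypothetical.

```python
import math
from statistics import mean
from typing import Dict, List, Sequence


def latency_report(events: Sequence[Dict[str, float]],
                   stages: Sequence[str]) -> Dict[str, Dict[str, float]]:
    """Per-stage latency statistics (ms) from timestamps (s) recorded along a pipeline.

    Each event maps stage name -> timestamp, e.g. {"sensor": ..., "received": ...}.
    """
    def summarize(values: List[float]) -> Dict[str, float]:
        ordered = sorted(values)
        rank = max(1, math.ceil(0.95 * len(ordered)))  # nearest-rank 95th percentile
        return {"mean_ms": mean(ordered), "p95_ms": ordered[rank - 1], "max_ms": ordered[-1]}

    # consecutive stage pairs plus the end-to-end path
    pairs = list(zip(stages, stages[1:])) + [(stages[0], stages[-1])]
    report = {}
    for start, end in pairs:
        deltas = [(e[end] - e[start]) * 1000.0 for e in events]
        report[f"{start}->{end}"] = summarize(deltas)
    return report
```

Looking at the tail (p95, max) rather than only the mean matters for closed-loop designs, because an occasional long delay can invalidate an individual trial.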

Semantic Event Layers: Adding Meaning to Data

Semantic Event Layers involve adding meaningful, human-interpretable labels and annotations to raw data streams. While sensors capture raw physical signals, semantic event layers transform these signals into higher-level, conceptually rich information. For example, raw motion capture data might be translated into semantic events like “walking,” “sitting,” or “reaching.” Similarly, physiological data might be annotated with semantic labels like “stress response” or “cognitive effort.” These layers are often created through a combination of manual coding, rule-based algorithms, and machine learning models. Semantic event layers facilitate easier interpretation, enable more sophisticated queries and analyses, and bridge the gap between raw data and theoretical constructs, making the data more accessible and useful for a wider range of research questions.
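A rule-based semantic layer can be sketched as two steps: label each frame from a derived signal, then merge consecutive frames with the same label into events with onset and offset. The minimal Python sketch below uses movement speed from motion capture. The labels "stationary", "walking", and "running" and the speed thresholds are hypothetical illustrations, not validated cut-offs. An event's offset is the timestamp of its last frame.

```python
from typing import List, Sequence, Tuple


def label_frame(speed: float) -> str:
    """Rule-based label for one frame (hypothetical thresholds in m/s)."""
    if speed < 0.05:
        return "stationary"
    if speed < 2.0:
        return "walking"
    return "running"


def semantic_events(times: Sequence[float],
                    speeds: Sequence[float]) -> List[Tuple[str, float, float]]:
    """Collapse per-frame labels into (label, onset, offset) events."""
    events: List[Tuple[str, float, float]] = []
    for t, s in zip(times, speeds):
        label = label_frame(s)
        if events and events[-1][0] == label:
            events[-1] = (label, events[-1][1], t)  # extend the current event
        else:
            events.append((label, t, t))  # start a new event
    return events
```

The resulting event list has the same structure as manually coded behavior, so it can be compared with human annotations or used in the same analyses.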

7. Conclusion: The Future of Observational Research

The landscape of observational research is rapidly evolving, driven by advancements in sensor technology, computational power, and data science methodologies. Creating a modern observational research lab is a complex undertaking, requiring careful consideration of technical infrastructure, software integration, communication protocols, and data management strategies. However, the challenges are far outweighed by the opportunities to gain unprecedented insights into human and animal behavior, cognition, and physiology.

Future Directions: Towards More Integrated and Intelligent Labs

The future of observational research labs points towards even greater integration and intelligence. We can anticipate:

- Miniaturization and Wearables: Smaller, less intrusive sensors and wearable devices will enable more naturalistic data collection in a wider range of environments, moving research out of the lab and into everyday life.

- Advanced AI and Machine Learning: AI will play an increasingly central role, not just in data analysis but also in real-time event detection, automated experimental control, and even generating hypotheses from complex datasets.

- Virtual and Augmented Reality Integration: VR/AR will offer highly controlled yet immersive environments for studying behavior, allowing for precise manipulation of stimuli and contexts while capturing rich multimodal data.

- Cloud-Based Infrastructure: Greater reliance on cloud computing for data storage, processing, and collaborative analysis will enhance scalability, accessibility, and data sharing across research institutions.

- Ethical AI and Data Privacy: As data collection becomes more pervasive and sophisticated, increased attention will be paid to ethical considerations, data privacy, and the responsible use of AI in behavioral research.

Key Recommendations for Building a Modern Lab

For researchers embarking on the journey of building or upgrading an observational research lab, several key recommendations emerge:

- Prioritize Interoperability: Invest in hardware and software that support open standards and provide robust APIs to minimize compatibility issues and ensure future-proofing.

- Focus on Time Synchronization: Recognize that precise time alignment is the bedrock of multimodal analysis and invest in technologies like LSL and PTP to achieve it.

- Plan for Data Management: Develop comprehensive strategies for data storage, backup, and organization from the outset, considering the volume and variety of data generated.

- Embrace Automation: Leverage automation tools for experiment control, data acquisition, and preliminary processing to enhance efficiency and reduce human error.

- Invest in specialized software: Sensor hardware, audio/video devices, and computing power represent only one aspect of the equation. Specialized software for capturing audio/video data and sensor data is crucial for creating reliable data sets for further analysis.

- Select solutions that ensure long-term viability: Invest in solutions that are future-proof, modularly expandable, and enable open exchange of raw data.

- Ensure that you get the support you need: It is important to select tools that come from a source that provides substantial support in overcoming technical challenges, can be adapted to changing research requirements, and offers guidance on research design, data collection, and analysis. Open-source and freeware tools may not be able to provide you with solutions and assistance in the time you need.

- Store your data in a tool chain: Invest in a tool chain that can truly transfer data seamlessly between tools to avoid error-prone and time-consuming manual data processing.

- Choose the right tools: Choose the tools that ultimately generate answers to your research questions without requiring n+1 different manual data transformations.

Final Thoughts on Opportunities: Unlocking New Discoveries

Investing in advanced observational research infrastructure ultimately leads to significant advances in our understanding of the phenomena under study. By overcoming the technical challenges, researchers can ask more nuanced questions, capture more comprehensive data, and uncover deeper insights into the complexities of behavior and the human mind. From optimizing user experiences and advancing clinical interventions to understanding fundamental cognitive processes, a modern observational research lab is a powerful engine for scientific progress. This progress promises a future where our understanding of behavior is more precise, objective, and holistic than ever before.

Mangold Observation Labs

Mangold Observation Labs are comprehensive turnkey solutions for conducting behavioral research and observation.
